Computational Geodynamics

Computer simulation is now established as a third pillar of scientific discovery, alongside observation/experiment and theory. The main focus of computer experiments in the Geodynamics group is the simulation of convective processes in the Earth's mantle. The equations governing flow in the mantle (conservation of mass, momentum and energy) are well understood, and many powerful numerical methods exist to solve them. Yet simulating mantle convection on a global scale with adequate resolution remains beyond the capabilities of even the most advanced computers. This difficulty arises from the nature of mantle convection, which requires dealing with aspects such as:

(1) scale disparity, i.e. small structures must be resolved within a global convective system, (2) 3D variations in rheology and composition, (3) the complex thermodynamic properties of mantle minerals, and (4) the feedback between the dynamic flow system and its boundaries (the surface and the core-mantle boundary).

Further difficulties arise because some processes are active on a microscopic scale, for example the evolution of mineral grain size. Even in the best case, their effect on the macroscopic flow can often be incorporated only by parametrization. This leads to a complex picture of the mantle, where different length and time scales need to be resolved in numerical simulations.

For this reason, high-performance computing (HPC) is an essential element of top-level research in Geodynamics.
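For reference, the conservation laws mentioned above are often written in a simplified form for a highly viscous, incompressible fluid under the Boussinesq approximation. This is a common textbook formulation given here as an illustration; it is an assumption that it resembles the equations used in the group's codes, which may include compressibility, phase transitions and further terms.

$$
\begin{aligned}
\nabla \cdot \mathbf{u} &= 0 && \text{(mass)} \\
-\nabla p + \nabla \cdot \left[ \eta \left( \nabla \mathbf{u} + (\nabla \mathbf{u})^{T} \right) \right] - \rho_0 \, \alpha \, (T - T_0) \, \mathbf{g} &= 0 && \text{(momentum)} \\
\rho_0 c_p \left( \frac{\partial T}{\partial t} + \mathbf{u} \cdot \nabla T \right) &= \nabla \cdot \left( k \nabla T \right) + \rho_0 H && \text{(energy)}
\end{aligned}
$$

Here $\mathbf{u}$ is the velocity, $p$ the dynamic pressure, $\eta$ the viscosity, $T$ the temperature, $\rho_0$ and $T_0$ a reference density and temperature, $\alpha$ the thermal expansivity, $\mathbf{g}$ the gravitational acceleration vector, $c_p$ the specific heat capacity, $k$ the thermal conductivity and $H$ the internal heating rate per unit mass. The momentum equation has no inertial term because mantle flow is extremely slow, and the strong dependence of $\eta$ on temperature, pressure and composition is one source of the difficulties listed above.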

Projects and High-Performance Computing

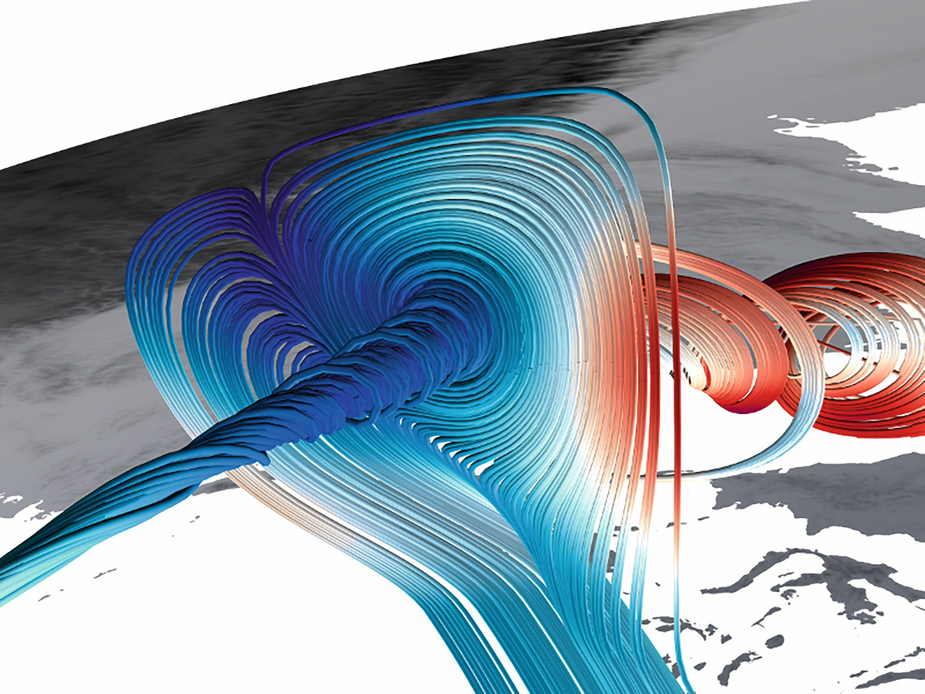

From the very beginning in 2003, the Munich Geodynamics group has been actively involved in a number of HPC- and IT-related projects, which naturally entails close cooperation with the GeoComputing group. Joint work includes research on stereoscopic visualization (GeoWall), data storage and management, numerical and parallelization techniques, and the design of compute clusters for capacity computing.

Current efforts focus on a software framework for extreme-scale simulations of the Earth's mantle. The framework is able to fully exploit the power of next-generation exascale supercomputers (i.e. machines reaching 10¹⁸ floating point operations per second). First exciting results have been achieved in the project TerraNeo, supported by the Priority Program 1648 "Software for Exascale Computing" (SPPEXA) of the German Research Foundation (DFG). A massively parallel multigrid method, based on the hierarchical hybrid grids (HHG) paradigm and implemented in the open source framework Hybrid Tetrahedral Grids (HyTeG), makes computations with an unprecedented resolution of the Earth's mantle possible. The computational grids subdivide the entire mantle into domains of about 1 km³ volume (compare this to the volume of the mantle of about 9.06 × 10¹¹ km³). As a consequence, a large system with over a trillion (10¹²) degrees of freedom is solved at each time step of a simulation. This demonstrates why mantle convection is regarded as a so-called grand challenge problem.

The success of the TerraNeo project is based on a long-term collaborative effort between the groups of Geophysics (LMU Munich), Numerical Mathematics (Prof. Barbara Wohlmuth, TU Munich), and System Simulation (Prof. Ulrich Rüde, FAU Erlangen-Nürnberg).
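The orders of magnitude quoted above can be checked with back-of-the-envelope arithmetic. The sketch below is illustrative only: the radii are assumed round values for the Earth's surface and the core-mantle boundary, the mantle is treated as a simple spherical shell, and the function names are hypothetical. It says nothing about how the actual grids are built.

```python
import math

# Assumed round values, not taken from the text above.
R_SURFACE_KM = 6371.0   # mean Earth radius
R_CMB_KM = 3480.0       # radius of the core-mantle boundary


def shell_volume_km3(r_inner_km, r_outer_km):
    """Volume of a spherical shell in cubic kilometres."""
    return 4.0 / 3.0 * math.pi * (r_outer_km**3 - r_inner_km**3)


def vector_memory_terabytes(n_unknowns, bytes_per_value=8):
    """Memory for one vector of unknowns (1 TB = 1e12 bytes)."""
    return n_unknowns * bytes_per_value / 1e12


def peak_flops_per_unknown(peak_flops_per_second, n_unknowns):
    """Peak floating point operations per second available per unknown."""
    return peak_flops_per_second / n_unknowns


mantle_volume_km3 = shell_volume_km3(R_CMB_KM, R_SURFACE_KM)
n_cells = mantle_volume_km3 / 1.0   # one cell per cubic kilometre
n_unknowns = 1e12                   # "over a trillion" degrees of freedom

print(f"mantle shell volume : {mantle_volume_km3:.2e} km^3")
print(f"cells of 1 km^3     : {n_cells:.2e}")
print(f"one double vector   : {vector_memory_terabytes(n_unknowns):.1f} TB")
print(f"exascale peak       : {peak_flops_per_unknown(1e18, n_unknowns):.1e} flop/s per unknown")
```

Even a single double-precision vector of a trillion unknowns occupies several terabytes, so the memory has to be distributed across many compute nodes before any solver work begins.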

Computational Infrastructure

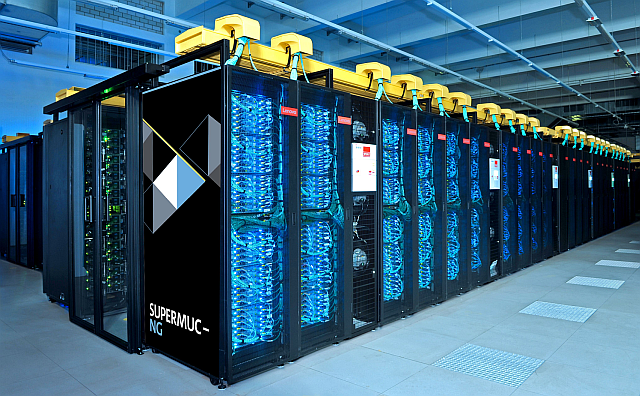

To test software and perform mid-scale geophysical simulations routinely, the GeoComputing group operates an excellent computational infrastructure. At its center is the Munich Tectonic High-Performance Simulator (TETHYS), which allows a large share of capacity computing to be done in-house. For capability computing, i.e. extremely demanding simulations, we are granted access to high-end supercomputing facilities by the Leibniz Supercomputing Centre (LRZ), especially its flagship supercomputer SuperMUC-NG. Altogether, this ensures that scientific progress in our group is supported by world-leading expertise in the development and application of computational resources.